The Public Cloud Drive to Lowest-Latency Infrastructure

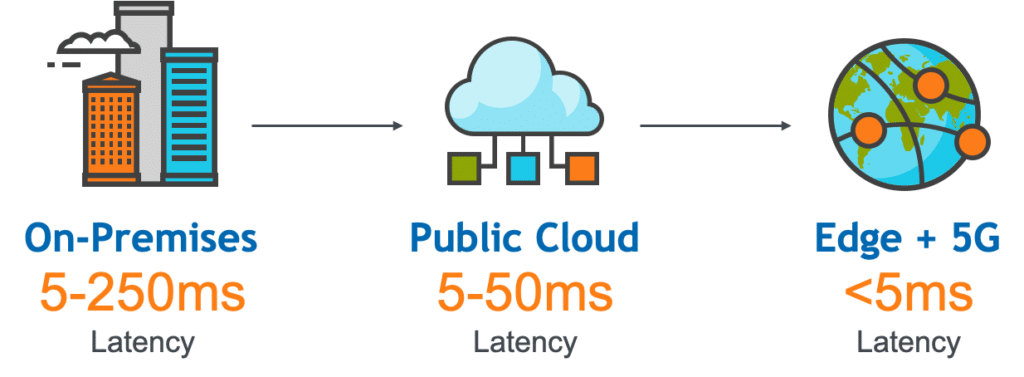

We are in the midst of a massive transformation in how computing is delivered. We’re moving on from the data center in droves, to mainstream acceptance of the public cloud, and now relentlessly toward computing at the mobile edge. Each of these computing “eras” is associated with lower latency, and that matters: beyond about 50ms, the user experience deteriorates noticeably. Today there is wide recognition that the traditional data center is a rigid, high-latency environment – especially problematic for people who are remote from it. In contrast, the vast global coverage of the big cloud players has taken a big bite out of latency, reducing it to the point that even power users who rely on graphics-intensive applications can flourish in practically any work setting. Plus, the elasticity of the public cloud brings new levels of flexibility to organizations operating in highly dynamic industries. The next big step in the march to ever-lower latency is the cloud edge, which will enable new use cases requiring even faster data processing.

How Low Can We Go?

On stage at Amazon’s inaugural re:MARS artificial intelligence and robotics event, Jeff Bezos, CEO of Amazon, said, “It’s interesting, I do get asked quite frequently what’s going to change in the next 10 years. One thing I rarely get asked is probably even more important – and I encourage you to think about this – it’s the question: What’s not going to change in the next 10 years? The answer to that question can allow you to organize your activities. You can work on those things with the confidence to know that all the energy you put into them today is still going to be paying you dividends 10 years from now.”

In the world of end-user computing performance, the need for lower and lower latency is one of those things that won’t change. The lower the latency, the better the application performance, the faster the data processing, and the higher the productivity – bringing us ever-faster outcomes, whatever it is we might be creating or analyzing.

Let’s take a closer look at what is driving the transformation toward the lowest-latency infrastructure.

The Data Center Era (1970-2015)

For as long as we have known server-side computing, it has been delivered from a data center, either owned by a company or operated out of a co-location facility. All software – databases, application servers, web servers, load balancers, VDI, SAP, etc. – was designed for this data center view of the world. Most mid-sized companies operated one data center close to their headquarters. Most large companies operated 3-4 data centers in the large geos – North America, EMEA, and APAC – and replicated their software solution stacks in each one. End users who were close to a data center experienced good performance, but for end users who were far from the data center(s), performance depended on the latency and quality of their WAN links. Because of this, during the data center era companies invested billions of dollars in WAN acceleration technology, such as Riverbed, to improve performance for remote users.

The Public Cloud Era (2014-)

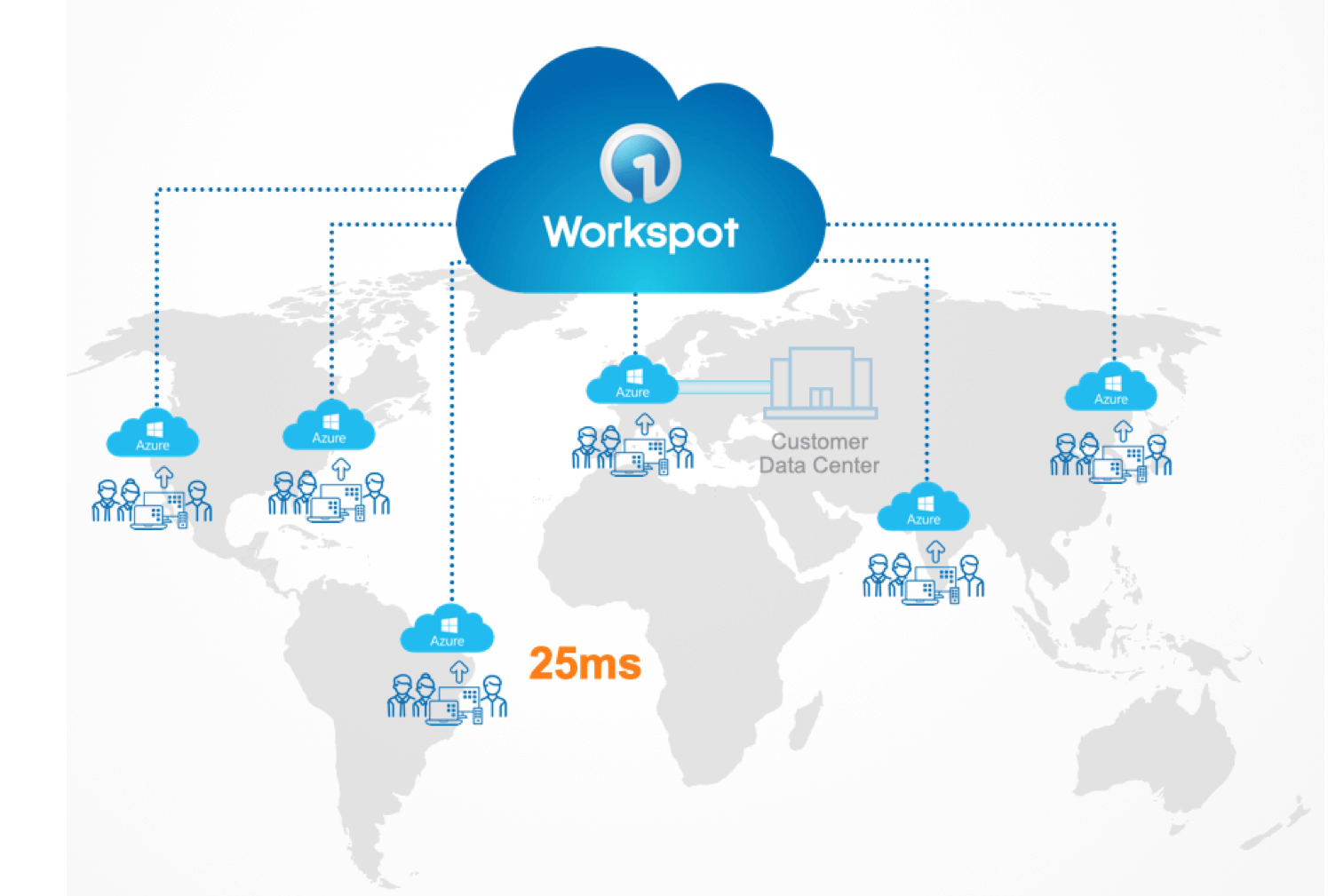

SaaS applications such as Salesforce, Workday, and ServiceNow ushered in a new application delivery model: SaaS vendors operate the application for customers instead of each customer having to operate it themselves. Infrastructure as a Service (IaaS) vendors such as Amazon Web Services introduced enterprises to the public cloud model, where they could consume infrastructure yet offload the management of it to the vendor. The most obvious benefits of the public cloud were greater agility and elasticity. IT could consume resources on demand without having to wait months to spec, procure, and provision infrastructure. But IaaS also provided another, more nuanced but even more powerful benefit: each customer gains access to tens of regions in which they can deploy infrastructure. No longer is infrastructure availability limited to the handful of regions where a company operates its own data centers. With the global ubiquity of the public cloud, companies can deploy software in tens of regions around the world without building any facilities of their own. Now, this massive public cloud scale isn’t very useful for legacy software that was designed for the data center era, because that software cannot take advantage of a globally distributed architecture. But there is a new generation of software – CockroachDB, Spanner, and CosmosDB (databases) and Workspot (VDI) are examples – that is built for this distributed world. The resulting reduction in latency from hundreds of milliseconds to tens of milliseconds is transforming the end-user experience, which in turn improves business productivity and opens doors to new opportunities for growth.

The Mobile Edge Era (2019-)

Yet with the public cloud, latency is still typically in the 5-25ms range. With the advent of 5G enabling cellular connectivity with sub-5ms latencies, there is a rush to pair that connectivity with low-latency compute at the mobile edge. Amazon has announced AWS Local Zones in Los Angeles and AWS Wavelength with Verizon, KDDI, Vodafone, and SK Telecom. Microsoft has announced Azure Edge Zones, as well as Azure Edge Zones with carriers including AT&T, Telefonica, NTT, SK Telecom, and others, and Google has announced GCP Mobile Edge Cloud with AT&T. We are still early in our discovery of all the use cases enabled by this ultra-low-latency compute; opportunities are emerging in immersive experiences, mobile gaming, self-driving vehicles, and more – and as these infrastructure capabilities become more widely available, a new generation of applications will be created.

We know that the lower the latency, the better the user experience. That’s never going to change. As Jeff Bezos recommended, let’s try to optimize for that world!

Want to learn more about Workspot low-latency cloud PCs? Schedule a demo and let’s explore your requirements.